3 mins of Machine Learning: Multivariate Gaussian Classifier

Goal

The goal of this post is to introduce and implement two parametric classifiers that model each class's conditional distribution as a multivariate Gaussian with (a) a full covariance matrix and (b) a diagonal covariance matrix. Since I decided to code everything from the ground up, NumPy is the only package the classifier uses.

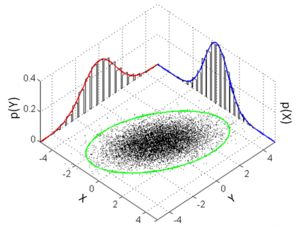

Multivariate Gaussian Classifier

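As before, we use Bayes' theorem for classification, relating the probability density of the data given the class to the posterior probability of the class given the data. Consider d-dimensional data $\mathbf{x}$ from class $C_c$, modelled using a multivariate Gaussian with mean $\boldsymbol{\mu}_c$ and covariance matrix $\boldsymbol{\Sigma}_c$. Using Bayes' theorem we can write:

$$P(C_c \mid \mathbf{x}) = \frac{p(\mathbf{x} \mid C_c)\,P(C_c)}{p(\mathbf{x})}, \qquad p(\mathbf{x} \mid C_c) = \frac{1}{(2\pi)^{d/2}\,\lvert\boldsymbol{\Sigma}_c\rvert^{1/2}} \exp\left(-\frac{1}{2}(\mathbf{x}-\boldsymbol{\mu}_c)^{\top}\boldsymbol{\Sigma}_c^{-1}(\mathbf{x}-\boldsymbol{\mu}_c)\right)$$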

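The log likelihood is:

$$\ln p(\mathbf{x} \mid C_c) = -\frac{d}{2}\ln(2\pi) - \frac{1}{2}\ln\lvert\boldsymbol{\Sigma}_c\rvert - \frac{1}{2}(\mathbf{x}-\boldsymbol{\mu}_c)^{\top}\boldsymbol{\Sigma}_c^{-1}(\mathbf{x}-\boldsymbol{\mu}_c)$$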
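The log likelihood above maps directly onto NumPy. The sketch below is a standalone helper of my own (`gaussian_log_pdf` is a hypothetical name, not part of the classifier class). It also checks that, with a diagonal covariance, the joint log-density equals the sum of the per-feature univariate log-densities, which is exactly the independence assumption the diagonal classifier makes:

```python
import numpy as np


def gaussian_log_pdf(x, mu, sigma):
    """Log-density ln N(x | mu, sigma) of a d-dimensional Gaussian."""
    x = np.asarray(x, dtype=float)
    mu = np.asarray(mu, dtype=float)
    sigma = np.asarray(sigma, dtype=float)
    d = mu.shape[0]
    diff = x - mu
    # slogdet returns (sign, ln|det|) and avoids overflow/underflow of det itself
    sign, logdet = np.linalg.slogdet(sigma)
    if sign <= 0:
        raise ValueError("sigma must be positive definite")
    # solve(sigma, diff) gives sigma^{-1} (x - mu) without forming the inverse
    mahalanobis = diff @ np.linalg.solve(sigma, diff)
    return -0.5 * (d * np.log(2 * np.pi) + logdet + mahalanobis)


# with a diagonal covariance the density factorises over the features
x, mu, var = np.array([1.0, 2.0]), np.array([0.0, 1.0]), np.array([4.0, 9.0])
joint = gaussian_log_pdf(x, mu, np.diag(var))
per_feature = -0.5 * (np.log(2 * np.pi * var) + (x - mu) ** 2 / var)
print(joint, per_feature.sum())
```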

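And we can write the log posterior probability:

$$\ln P(C_c \mid \mathbf{x}) = -\frac{1}{2}\ln\lvert\boldsymbol{\Sigma}_c\rvert - \frac{1}{2}(\mathbf{x}-\boldsymbol{\mu}_c)^{\top}\boldsymbol{\Sigma}_c^{-1}(\mathbf{x}-\boldsymbol{\mu}_c) + \ln P(C_c) + \text{const}$$

where the constant collects $-\frac{d}{2}\ln(2\pi)$ and $-\ln p(\mathbf{x})$, neither of which depends on the class.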
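Because $-\ln p(\mathbf{x})$ is the same for every class, it can be dropped when we only need the class with the largest posterior. If actual posterior probabilities are wanted (for example as scores for an ROC curve), they can be recovered from the unnormalized log values with a log-sum-exp. A minimal NumPy sketch; `posterior_from_log_joint` is a hypothetical helper name:

```python
import numpy as np


def posterior_from_log_joint(log_joint):
    """Normalise ln p(x|C_c) + ln P(C_c) into posteriors P(C_c|x)."""
    log_joint = np.asarray(log_joint, dtype=float)
    # ln p(x) = ln sum_c exp(log_joint_c); subtract the max first for stability
    m = np.max(log_joint, axis=-1, keepdims=True)
    log_evidence = m + np.log(np.sum(np.exp(log_joint - m), axis=-1, keepdims=True))
    return np.exp(log_joint - log_evidence)


scores = np.array([np.log(0.2), np.log(0.6)])
print(posterior_from_log_joint(scores))            # approximately [0.25, 0.75]
# very negative log values would underflow a naive exp, but not here
print(posterior_from_log_joint([-1000.0, -1000.0]))
```

Normalizing does not change which class wins, so the classifier can skip this step and take the arg max of the unnormalized values directly.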

Idea

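Using the training data, I compute the maximum likelihood estimates of the class prior probabilities $P(C_c)$ and of the class-conditional densities, which in turn rely on the maximum likelihood estimates of the mean $\boldsymbol{\mu}_c$ and the (full or diagonal) covariance $\boldsymbol{\Sigma}_c$ of each class. Classification is then based on the following discriminant function, which is the log posterior with the class-independent constant dropped:

$$y_c(\mathbf{x}) = -\frac{1}{2}\ln\lvert\boldsymbol{\Sigma}_c\rvert - \frac{1}{2}(\mathbf{x}-\boldsymbol{\mu}_c)^{\top}\boldsymbol{\Sigma}_c^{-1}(\mathbf{x}-\boldsymbol{\mu}_c) + \ln P(C_c), \qquad \hat{c} = \arg\max_c\, y_c(\mathbf{x})$$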
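For reference, the maximum likelihood estimates mentioned above are the standard ones for a Gaussian, writing $N_c$ for the number of training points in class $C_c$ and $N$ for the total number of training points (notation introduced here for convenience). The diagonal model keeps only the per-feature variances $\hat{\sigma}^2_{c,k}$:

$$\hat{P}(C_c) = \frac{N_c}{N}, \qquad \hat{\boldsymbol{\mu}}_c = \frac{1}{N_c}\sum_{n \in C_c}\mathbf{x}_n, \qquad \hat{\boldsymbol{\Sigma}}_c = \frac{1}{N_c}\sum_{n \in C_c}(\mathbf{x}_n-\hat{\boldsymbol{\mu}}_c)(\mathbf{x}_n-\hat{\boldsymbol{\mu}}_c)^{\top}$$

$$\hat{\boldsymbol{\Sigma}}_c^{\text{diag}} = \operatorname{diag}\left(\hat{\sigma}_{c,1}^2, \dots, \hat{\sigma}_{c,d}^2\right), \qquad \hat{\sigma}_{c,k}^2 = \frac{1}{N_c}\sum_{n \in C_c}(x_{n,k}-\hat{\mu}_{c,k})^2$$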

Code

The code is divided into three parts:

`__init__(self, k, d, diag)`: initializes the parameters of each of the k classes (with d features) to a uniform prior, zero mean, and identity covariance. `diag` is a boolean that indicates whether the estimated class covariance matrices should be full (`diag=False`) or diagonal (`diag=True`).

`fit(self, X, y)`: the inputs X and y are the training feature matrix and the class labels. It computes the maximum likelihood estimates of the class priors, means, and covariances.

`predict(self, X)`: the input X is the feature matrix of the test set, and the output is the predicted label for each point in the test set.
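The only difference between the two models is what `fit` does with the covariance. The sketch below is a standalone helper with a hypothetical name, `mle_covariance`: it computes the MLE covariance of one class, dividing by $N_c$ rather than $N_c - 1$, and shows that the diagonal estimate is simply the full estimate with the off-diagonal covariances set to zero:

```python
import numpy as np


def mle_covariance(X_c, diag=False):
    """Maximum likelihood covariance of the rows of X_c (divides by N_c)."""
    X_c = np.asarray(X_c, dtype=float)
    diff = X_c - X_c.mean(axis=0)        # broadcasting subtracts the mean from every row
    cov = diff.T @ diff / X_c.shape[0]
    if diag:
        # diagonal model: keep the per-feature variances, zero every covariance
        cov = np.diag(np.diag(cov))
    return cov


X_c = np.array([[0.0, 0.0], [2.0, 2.0], [4.0, 1.0]])
print(mle_covariance(X_c))
print(mle_covariance(X_c, diag=True))
```

`np.cov(X_c, rowvar=False, bias=True)` gives the same full estimate; the explicit version just makes the formula visible.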

Here is the code:

```python
import numpy as np


class MultiGaussClassify:
    def __init__(self, k, d, diag=True):
        # k: number of classes, d: number of features
        # diag=True -> diagonal covariance, diag=False -> full covariance
        self.k = k
        self.d = d
        # start every class with a uniform prior, zero mean and identity covariance
        self.prior = np.full(k, 1.0 / k)
        self.mu = np.zeros((k, d))
        self.sigma = [np.identity(d) for _ in range(k)]
        self.diag = diag
        self.class_list = np.arange(k)

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y)

        # MLE of the priors: fraction of training points in each class
        self.class_list, counts = np.unique(y, return_counts=True)
        self.prior = counts / len(y)

        for i in range(self.k):
            # rows of X that belong to class i, shape (n_i, d)
            selected_rows = X[y == self.class_list[i]]

            # MLE of the class mean
            self.mu[i] = selected_rows.mean(axis=0)

            # MLE of the class covariance (divides by n_i, not n_i - 1);
            # broadcasting subtracts the mean from every row
            diff = selected_rows - self.mu[i]
            cov = diff.T @ diff / counts[i]
            if self.diag:
                # keep only the per-feature variances
                cov = np.diag(np.diag(cov))
            self.sigma[i] = cov

        # a singular covariance (e.g. a pixel that is constant within a class)
        # cannot be inverted, so add a tiny ridge to its diagonal; comparing
        # det(...) == 0 is unreliable in floating point, so check the rank instead
        epsilon = 1e-9
        for i in range(self.k):
            if np.linalg.matrix_rank(self.sigma[i]) < self.d:
                self.sigma[i] = self.sigma[i] + epsilon * np.identity(self.d)

    def predict(self, X):
        X = np.asarray(X, dtype=float)
        scores = np.zeros((X.shape[0], self.k))

        for j in range(self.k):
            # log|Sigma_j| via slogdet, which cannot underflow the way det can
            _, logdet = np.linalg.slogdet(self.sigma[j])
            inv_sigma = np.linalg.inv(self.sigma[j])
            log_prior = np.log(self.prior[j])

            # (x - mu_j)^T Sigma_j^{-1} (x - mu_j) for every test row at once
            diff = X - self.mu[j]
            mahalanobis = np.einsum("ij,jk,ik->i", diff, inv_sigma, diff)

            # discriminant: -1/2 log|Sigma| - 1/2 Mahalanobis + log prior
            scores[:, j] = -0.5 * logdet - 0.5 * mahalanobis + log_prior

        # predicted label = class with the largest discriminant
        return self.class_list[np.argmax(scores, axis=1)]
```
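Why prefer `np.linalg.slogdet` over `math.log(np.linalg.det(...))`? With 64 features, the determinant of a covariance matrix can fall outside the range of a double, in which case `det` returns 0.0 and `math.log` raises an error, even though the log-determinant itself is an ordinary number. A small illustration with a made-up covariance (64 features with variance 1e-6 each, chosen only to trigger the underflow, not taken from the digits data):

```python
import math

import numpy as np

# 64 features (as in the 8x8 digits images), each with a small variance
sigma = np.identity(64) * 1e-6

det = np.linalg.det(sigma)                # true value 1e-384 is below the smallest double
sign, logdet = np.linalg.slogdet(sigma)   # works in log space instead

print(det)                                # 0.0
print(sign, logdet, 64 * math.log(1e-6))
try:
    math.log(det)
except ValueError as err:
    print("math.log(det) fails:", err)
```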

Performance

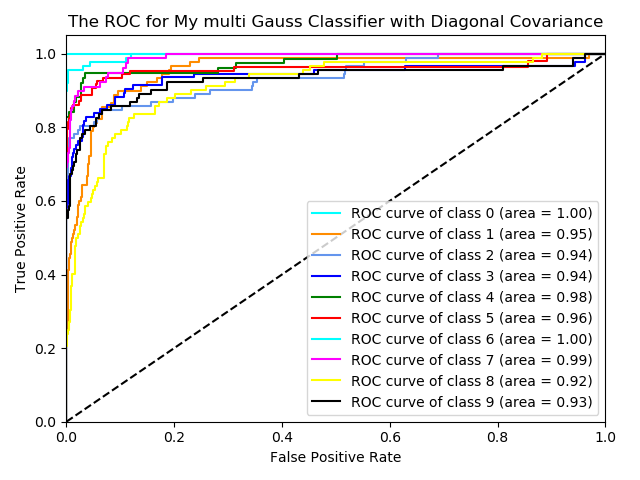

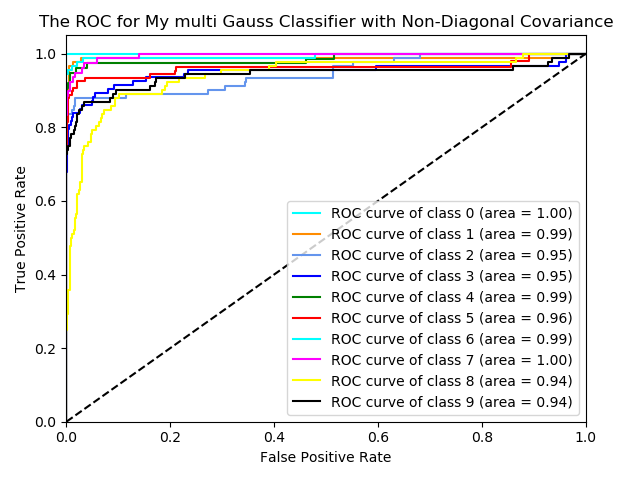

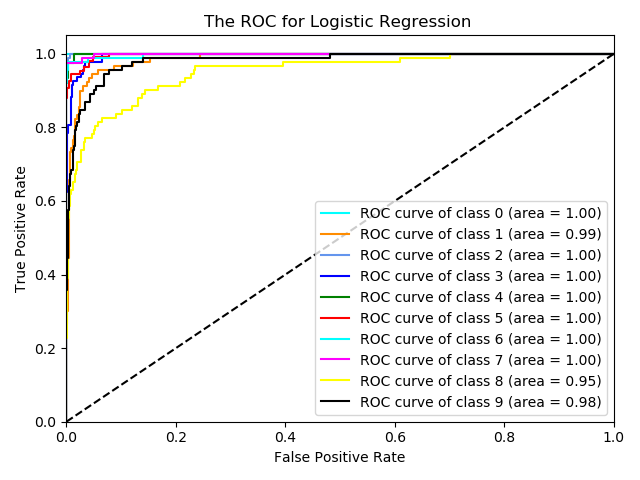

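To check the performance of the classifier, I use the digits dataset from scikit-learn, and I use logistic regression for comparison.

The figures above show the ROC curves for the three classifiers: the Gaussian classifier with full covariance, the Gaussian classifier with diagonal covariance, and logistic regression.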
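The post does not say how the ROC curves were built for a 10-class problem. A common choice, assumed here, is one-vs-rest: for each digit, rank the test points by that class's score (its discriminant value or posterior) and sweep a threshold over the ranking. A NumPy-only sketch; `roc_curve_ovr` and `auc` are hypothetical helpers, and scikit-learn's `roc_curve` and `roc_auc_score` would do the same job:

```python
import numpy as np


def roc_curve_ovr(scores, y_true, positive_class):
    """One-vs-rest ROC curve (fpr, tpr) from per-point scores for one class."""
    scores = np.asarray(scores, dtype=float)
    is_pos = np.asarray(y_true) == positive_class
    order = np.argsort(-scores, kind="stable")   # highest score first
    scores, is_pos = scores[order], is_pos[order]
    # one threshold per distinct score, so tied points move together
    last_of_tie = np.r_[np.flatnonzero(np.diff(scores)), len(scores) - 1]
    tp = np.cumsum(is_pos)[last_of_tie]
    fp = np.cumsum(~is_pos)[last_of_tie]
    # assumes both positives and negatives are present
    tpr = np.r_[0.0, tp / tp[-1]]
    fpr = np.r_[0.0, fp / fp[-1]]
    return fpr, tpr


def auc(fpr, tpr):
    """Area under the ROC curve with the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2))


fpr, tpr = roc_curve_ovr([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0], positive_class=1)
print(auc(fpr, tpr))   # 0.75
```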

From the graphs, the classifier with the full (non-diagonal) covariance performs better than the diagonal one. This suggests that some of the 64 features are correlated within each class, which the diagonal model cannot capture because it treats the features as independent given the class. Logistic regression performs somewhat better than the multivariate Gaussian classifier.
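The link between correlated features and the advantage of the full covariance can be checked on synthetic data. In the sketch below (made-up 2-D data, not the digits set), both classes have zero mean and unit variances and differ only in the sign of the correlation, so a diagonal model sees two identical classes while a full-covariance model can separate them. `fit_gaussians` and `predict` are a compact functional restatement of the same MLE and discriminant, not the post's class:

```python
import numpy as np


def fit_gaussians(X, y, diag):
    """MLE priors, means and (full or diagonal) covariances for each class."""
    classes, counts = np.unique(y, return_counts=True)
    priors = counts / len(y)
    mus, sigmas = [], []
    for c in classes:
        X_c = X[y == c]
        mu = X_c.mean(axis=0)
        diff = X_c - mu
        cov = diff.T @ diff / len(X_c)
        if diag:
            cov = np.diag(np.diag(cov))   # drop the covariances
        mus.append(mu)
        sigmas.append(cov)
    return classes, priors, mus, sigmas


def predict(X, classes, priors, mus, sigmas):
    """Pick the class with the largest Gaussian discriminant."""
    scores = np.empty((len(X), len(classes)))
    for j, (prior, mu, sigma) in enumerate(zip(priors, mus, sigmas)):
        _, logdet = np.linalg.slogdet(sigma)
        diff = X - mu
        mahalanobis = np.einsum("ij,ij->i", diff @ np.linalg.inv(sigma), diff)
        scores[:, j] = -0.5 * logdet - 0.5 * mahalanobis + np.log(prior)
    return classes[np.argmax(scores, axis=1)]


# two zero-mean classes with unit variances that differ only in correlation
rng = np.random.default_rng(0)
n = 2000
cov0 = np.array([[1.0, 0.9], [0.9, 1.0]])
cov1 = np.array([[1.0, -0.9], [-0.9, 1.0]])
X = np.vstack([rng.multivariate_normal([0.0, 0.0], cov0, size=n),
               rng.multivariate_normal([0.0, 0.0], cov1, size=n)])
y = np.repeat([0, 1], n)

# alternate rows for training and testing so both splits contain both classes
train, test = np.arange(0, 2 * n, 2), np.arange(1, 2 * n, 2)
accs = {}
for diag in (False, True):
    params = fit_gaussians(X[train], y[train], diag)
    accs[diag] = np.mean(predict(X[test], *params) == y[test])

print(f"full covariance: {accs[False]:.3f}, diagonal covariance: {accs[True]:.3f}")
```

For this setup the optimal rule predicts class 0 when $x_1 x_2 > 0$, which is right with probability $\tfrac{1}{2} + \arcsin(0.9)/\pi \approx 0.86$, so the full model should land near that value while the diagonal model should stay near chance.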